Welcome to the brave new world of data, a world that is not just evolving but also actively being reshaped by remarkable technologies.

It is a realm where our traditional understanding of data is continuously being challenged and transformed, paving the way for revolutionary methodologies and innovative tools.

Among these cutting-edge technologies, two stand out for their potential to dramatically redefine our data-driven future: Generative AI and Synthetic Data.

In this blog post, we will delve deeper into these fascinating concepts.

We will explore what Generative AI and Synthetic Data are, how they interact, and most importantly, how they are changing the data landscape.

So, strap in and get ready for a tour of the future of data.

Understanding Generative AI and Synthetic Data

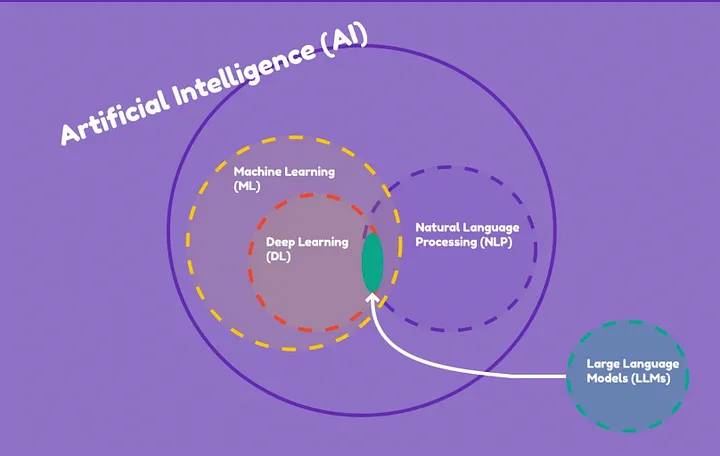

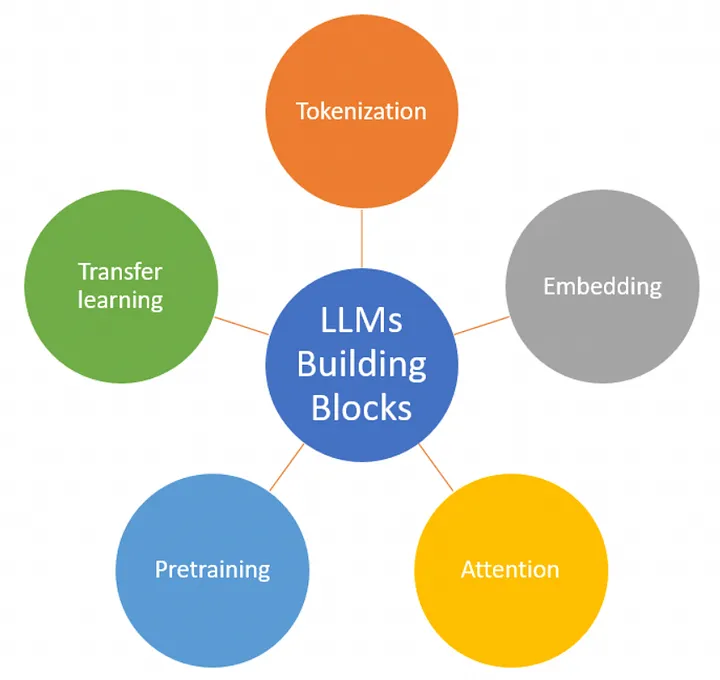

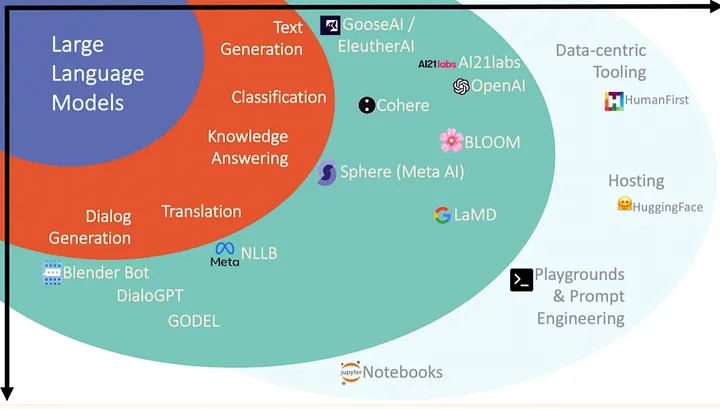

Generative AI refers to a subset of artificial intelligence, and of machine learning in particular, that uses models such as Generative Adversarial Networks (GANs) to create new content. It’s ‘generative’ because it produces something new from random noise or existing data inputs, whether that is an image, a piece of text, tabular data, or even music.

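GANs are powerful models built from two neural networks: the generator, which produces new data instances, and the discriminator, which judges whether each instance is real or generated. The two are trained against each other. The discriminator learns to tell real data from generated data, while the generator learns to fool the discriminator. Over time, this competition pushes the generator toward increasingly realistic outputs.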
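To make the generator/discriminator interplay concrete, here is a minimal sketch of a toy one-dimensional GAN in Python with NumPy. Everything in it is an illustrative assumption rather than a real-world setup. The generator is just a linear map `a * z + b` of Gaussian noise, the discriminator is a logistic regression `sigmoid(w * x + c)`, and the gradients are derived by hand. Practical GANs use deep networks and an automatic-differentiation framework instead.

```python
import numpy as np


def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))


def discriminator_loss(d_real, d_fake, eps=1e-12):
    # Binary cross-entropy: real samples are labelled 1, generated samples 0.
    return float(-np.mean(np.log(d_real + eps)) - np.mean(np.log(1.0 - d_fake + eps)))


def generator_loss(d_fake, eps=1e-12):
    # "Non-saturating" generator loss: low when the discriminator calls fakes real.
    return float(-np.mean(np.log(d_fake + eps)))


def train_step(params, x_real, z, lr=0.05):
    """One alternating gradient-descent update on the two losses above.

    Toy model (an assumption for illustration):
      generator:     x_fake = a * z + b
      discriminator: D(x)   = sigmoid(w * x + c)
    """
    a, b, w, c = params["a"], params["b"], params["w"], params["c"]

    # 1) Discriminator step: push D(real) toward 1 and D(fake) toward 0.
    x_fake = a * z + b
    d_real = sigmoid(w * x_real + c)
    d_fake = sigmoid(w * x_fake + c)
    grad_w = np.mean(-(1.0 - d_real) * x_real) + np.mean(d_fake * x_fake)
    grad_c = np.mean(-(1.0 - d_real)) + np.mean(d_fake)
    w -= lr * grad_w
    c -= lr * grad_c

    # 2) Generator step: move the fakes to where the updated D scores them as real.
    d_fake = sigmoid(w * x_fake + c)
    grad_a = np.mean(-(1.0 - d_fake) * w * z)
    grad_b = np.mean(-(1.0 - d_fake) * w)
    a -= lr * grad_a
    b -= lr * grad_b

    return {"a": a, "b": b, "w": w, "c": c}
```

A training loop would call `train_step` repeatedly with fresh batches, for example `x_real = rng.normal(4.0, 1.0, size=256)` as the hypothetical "real" data and `z = rng.normal(size=256)` as the noise. Plain alternating gradient steps like these can oscillate around the equilibrium rather than settle on it. This is one reason practical GAN training relies on additional stabilisation techniques.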

Today, the capabilities of Generative AI have advanced significantly. Large language models such as OpenAI’s GPT-4, which are built on the transformer architecture rather than on GANs, can produce remarkably human-like text. The technology is being refined continuously, making its outputs increasingly difficult to distinguish from real-world data.

Synthetic data refers to artificially created information that mimics the statistical characteristics of real-world data but does not directly correspond to real-world events. It is generated by algorithms or simulations, which reduces the need for traditional data collection, although many generators are first fitted to a sample of real data.

In our increasingly data-driven world, the demand for high-quality, diverse, and privacy-compliant data is soaring.

Current Challenges with Real Data

Across industries, companies are grappling with data-related challenges that prevent them from unlocking the full potential of artificial intelligence (AI) solutions.

These hurdles can be traced to various factors, including regulatory constraints, sensitivity of data, financial implications, and data scarcity.

Regulations:

Data protection regulations, such as the EU’s General Data Protection Regulation (GDPR), place strict rules on how personal data may be used and require transparency about how it is processed. These regulations exist to protect individuals’ privacy, but they can significantly limit the types and quantities of data available for developing AI systems.

Sensitive Data:

Moreover, many AI applications involve customer data, which is inherently sensitive. The use of production data poses significant privacy risks and requires careful anonymization, which can be a complex and costly process.

Financial Implications:

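Financial implications add another layer of complexity. Non-compliance with regulations can lead to severe penalties. Under the GDPR, for example, fines can reach €20 million or 4% of a company’s worldwide annual turnover, whichever is higher.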

Data Availability:

Furthermore, AI models typically require vast amounts of high-quality, historical data for training. However, such data is often hard to come by, posing a challenge in developing robust AI models.

This is where synthetic data comes in.

Synthetic data generators can produce rich, diverse datasets that resemble real-world data but contain no records of real individuals, which reduces compliance risk. Additionally, synthetic data can be created on demand, easing data scarcity and allowing for more robust AI model training.

By leveraging synthetic data, companies can navigate the data-related challenges and unlock the full potential of AI.

What is Synthetic Data?

Synthetic data refers to data that’s artificially generated rather than collected from real-world events. It is often produced by deep learning models, which can create a wide range of data types, from images and text to complex tabular data. Simpler statistical models, rule-based generators, and simulations are also widely used.

Synthetic data aims to mimic the characteristics and relationships inherent in real data, but without any direct linkage to actual events or individuals.
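As a concrete, deliberately simple illustration of mimicking characteristics and relationships, the sketch below fits a multivariate Gaussian (column means plus covariance) to a numeric table and samples new rows from it. This is a classical statistical model swapped in for clarity. It is not one of the deep learning generators described above, and the two-column "age/income" table is invented. A Gaussian captures only means, variances, and linear correlations, so skewed or categorical columns call for richer models.

```python
import numpy as np


def fit_gaussian(real: np.ndarray):
    """Estimate column means and the covariance matrix of a numeric table."""
    mean = real.mean(axis=0)
    cov = np.cov(real, rowvar=False)  # encodes pairwise linear relationships
    return mean, cov


def sample_synthetic(mean, cov, n_rows, seed=0):
    """Draw brand-new rows from the fitted distribution."""
    rng = np.random.default_rng(seed)
    return rng.multivariate_normal(mean, cov, size=n_rows)


# Hypothetical "real" table: two correlated columns (age, income in $k).
rng = np.random.default_rng(42)
age = rng.normal(40, 10, size=1_000)
income = 20 + 1.5 * age + rng.normal(0, 8, size=1_000)
real = np.column_stack([age, income])

mean, cov = fit_gaussian(real)
synthetic = sample_synthetic(mean, cov, n_rows=5_000)

print("real corr:     ", np.corrcoef(real, rowvar=False)[0, 1].round(2))
print("synthetic corr:", np.corrcoef(synthetic, rowvar=False)[0, 1].round(2))
```

The two printed correlations should come out close to each other. The synthetic rows preserve the relationship between the columns because they are sampled from the fitted distribution rather than copied from the real table.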

A synthetic data generator can be especially valuable for complex AI models, which typically require massive volumes of training data. These models can be “fed” with synthetically generated data. This can accelerate their development and, when the synthetic data faithfully reflects the real distribution, improve their performance.

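One of the key features of synthetic data is that it is anonymous by design.

Because it is not taken directly from real individuals or events, synthetic data should not contain personally identifiable information (PII). One caveat is that a generator trained on real records can memorize and reproduce some of them, so synthetic outputs are usually checked for near-copies of real records before release. With that safeguard in place, synthetic data is a powerful tool for data-related tasks where privacy and confidentiality are paramount.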

As such, it can help companies navigate stringent data protection regulations, such as GDPR, by providing a rich, diverse, and compliant data source for various purposes.
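A generator trained on real data can sometimes reproduce individual training records. For that reason, a common sanity check is to compute, for each synthetic row, the distance to the closest real record. Below is a minimal brute-force sketch in Python with NumPy. The function names and the near-zero threshold are illustrative assumptions. Because it compares all pairs, it only suits small tables, and columns should be put on comparable scales first. Passing this check does not by itself prove that a dataset is private.

```python
import numpy as np


def closest_record_distances(real: np.ndarray, synthetic: np.ndarray) -> np.ndarray:
    """For each synthetic row, the Euclidean distance to its nearest real row."""
    # Broadcast to all pairwise differences: shape (n_synthetic, n_real, n_columns).
    diffs = synthetic[:, None, :] - real[None, :, :]
    return np.sqrt((diffs ** 2).sum(axis=2)).min(axis=1)


def flag_possible_copies(real, synthetic, threshold=1e-6):
    """Indices of synthetic rows that (nearly) duplicate a real record."""
    return np.flatnonzero(closest_record_distances(real, synthetic) <= threshold)
```

For example, with real rows `[1, 2]` and `[3, 4]` and synthetic rows `[1, 2]` and `[5, 5]`, only the first synthetic row is flagged, because it is an exact copy of a real record.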

In essence, synthetic data can be seen as a powerful catalyst for advanced AI model development, offering a privacy-friendly, versatile, and abundant alternative to traditional data.

Its generation and use have the potential to redefine the data landscape across industries.

Synthetic Data Use Cases:

Synthetic data finds significant utility across various industries due to its ability to replicate real-world data characteristics while maintaining privacy.

Here are a few key use cases:

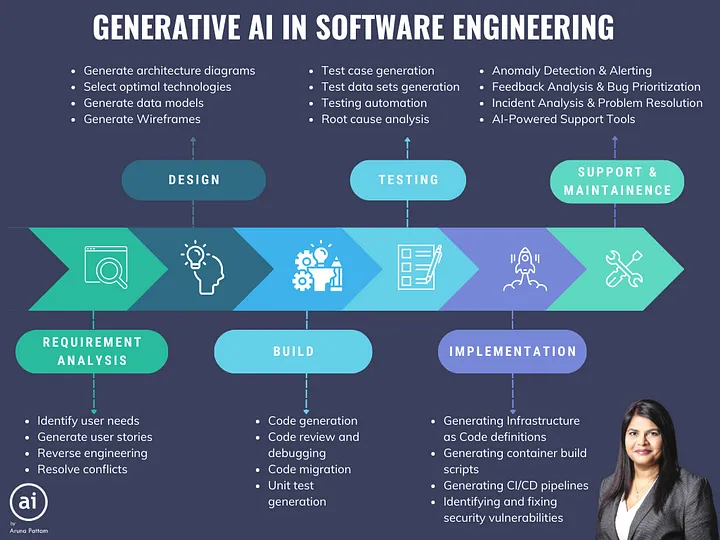

Testing and Development:

In testing and development, synthetic data generators can produce production-like data for testing purposes. This lets developers validate applications under conditions that closely mimic real-world operations.

Furthermore, synthetic data can be used to create test datasets for machine learning models. This speeds up quality assurance by providing diverse, scalable data without exposing real customer records.
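For application testing, production-like data is often produced by a simple rule-based generator rather than a learned model. The sketch below uses only Python’s standard library. The `Customer` schema, the name and country lists, and the value ranges are hypothetical placeholders for whatever a real system’s schema looks like. Seeding the random generator makes every test run reproducible.

```python
import random
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Customer:  # hypothetical schema for illustration
    customer_id: str
    name: str
    signup_date: date
    country: str
    balance: float


FIRST_NAMES = ["Ana", "Ben", "Chen", "Dara", "Eli"]
LAST_NAMES = ["Smith", "Garcia", "Ng", "Okafor", "Novak"]
COUNTRIES = ["DE", "FR", "US", "JP"]


def make_customers(n: int, seed: int = 0) -> list[Customer]:
    """Generate n fake but realistic-looking customer records."""
    rng = random.Random(seed)  # seeded, so the same seed yields the same rows
    start = date(2020, 1, 1)
    rows = []
    for i in range(n):
        rows.append(Customer(
            customer_id=f"C{i:06d}",  # unique, sequential IDs
            name=f"{rng.choice(FIRST_NAMES)} {rng.choice(LAST_NAMES)}",
            signup_date=start + timedelta(days=rng.randrange(1461)),  # 2020 to 2023
            country=rng.choice(COUNTRIES),
            balance=round(rng.uniform(0, 10_000), 2),
        ))
    return rows
```

Because the records are invented rather than copied from production, they can be shared freely with developers and CI pipelines. However, they only resemble real data as closely as the rules encoded in the generator.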

Healthcare:

The healthcare sector also benefits from synthetic data. For instance, synthetic medical records or claims can be generated for research purposes, giving AI teams realistic data to work with without violating patient confidentiality.

Similarly, synthetic CT and MRI scans can be created to train and refine machine learning models, which may help improve diagnostic accuracy.

Financial Services:

Financial services firms can replace sensitive client data with synthetic equivalents, allowing development and testing to proceed securely.

Moreover, synthetic data can be used to augment fraud detection datasets, in which fraudulent transactions are typically rare. This can improve the performance of detection algorithms.
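One well-known way to enlarge a rare class is SMOTE (Synthetic Minority Over-sampling Technique). SMOTE creates new minority examples by interpolating between an existing example and one of its nearest minority-class neighbours. The sketch below is a simplified, from-scratch version in NumPy for illustration only. It is a classical technique rather than generative AI, the function name is hypothetical, and the imbalanced-learn library provides a maintained implementation (`imblearn.over_sampling.SMOTE`). Oversampling like this should be applied to the training split only, so that no synthetic rows leak into evaluation data.

```python
import numpy as np


def smote_like_oversample(minority: np.ndarray, n_new: int, k: int = 3, seed: int = 0) -> np.ndarray:
    """Create n_new synthetic minority rows by interpolating between neighbours.

    Assumes k < len(minority).
    """
    rng = np.random.default_rng(seed)
    # Pairwise distances between minority rows; exclude each row's distance to itself.
    d = np.linalg.norm(minority[:, None, :] - minority[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)
    neighbours = np.argsort(d, axis=1)[:, :k]  # indices of the k nearest neighbours

    new_rows = np.empty((n_new, minority.shape[1]))
    for j in range(n_new):
        i = rng.integers(len(minority))            # a random minority (e.g. fraud) row
        nb = minority[rng.choice(neighbours[i])]   # one of its nearest neighbours
        gap = rng.random()                         # position along the segment, in [0, 1)
        new_rows[j] = minority[i] + gap * (nb - minority[i])
    return new_rows
```

Each new row lies on the line segment between two real minority examples. The synthetic cases therefore stay within the region the real fraud cases occupy, rather than being scattered arbitrarily.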

Insurance:

In Insurance, synthetic data can be used to generate artificial claims data. This can help in modeling various risk scenarios and aid in creating more accurate and fair policies, while keeping the actual claimant’s data private.
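Artificial claims for risk modelling are commonly produced by simulation. A textbook approach is the frequency-severity (compound Poisson) model. In this model, the number of claims per year is drawn from a Poisson distribution and each claim’s cost from a heavy-tailed distribution such as the lognormal. The minimal sketch below assumes that model. The portfolio size, claim rate, and severity parameters are invented for illustration and not calibrated to any real book of business.

```python
import numpy as np


def simulate_annual_claims(n_policies, claim_rate, sev_mu, sev_sigma, n_years, seed=0):
    """Simulate the total claim cost of a portfolio for n_years independent years.

    Frequency: claims per year ~ Poisson(n_policies * claim_rate)
    Severity:  each claim amount ~ LogNormal(sev_mu, sev_sigma)
    """
    rng = np.random.default_rng(seed)
    counts = rng.poisson(n_policies * claim_rate, size=n_years)
    return np.array([rng.lognormal(sev_mu, sev_sigma, size=c).sum() for c in counts])


# Hypothetical portfolio: 10,000 policies with a 5% annual claim rate.
totals = simulate_annual_claims(
    n_policies=10_000, claim_rate=0.05, sev_mu=8.0, sev_sigma=1.0, n_years=5_000
)
print(f"mean annual cost: {totals.mean():,.0f}")
print(f"99.5% quantile:   {np.quantile(totals, 0.995):,.0f}")
```

Under this model the expected annual cost is `n_policies * claim_rate * exp(sev_mu + sev_sigma**2 / 2)`, and the simulated mean should land close to it. The tail quantile is the kind of figure used in capital and pricing discussions. Because the inputs here are made up, the printed numbers only illustrate the mechanics.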

These use cases are just the tip of the iceberg, demonstrating the transformative potential of synthetic data across industries.

Conclusion:

In conclusion, the dynamic duo of Generative AI and Synthetic Data is set to transform the data landscape as we know it.

As we’ve seen, these technologies address critical issues, ranging from data scarcity and privacy concerns to regulatory compliance, thereby opening up new possibilities for AI development.

The future of Synthetic Data is promising, with an ever-expanding range of applications across industries. Its ability to provide an abundant, diverse, and privacy-compliant data source could be the key to unlocking revolutionary AI solutions and propelling us towards a more data-driven future.

As we continue to explore the depths of these transformative technologies, we encourage you to delve deeper and stay informed about the latest advancements.

Remember, understanding and embracing these changes today will equip us for the data-driven challenges and opportunities of tomorrow.